A Small Introduction:

Terminology matters when discussing sexual images of underage persons. The terms “child pornography,” “child porn,” “kiddie porn,” and “CP” are not appropriate for images of a crime in the midst of being committed against a living, breathing human victim. The term “pornography” serves to legitimize the production and existence of this material by implying it has a purpose and a use beyond the abuse and exploitation of a human victim. “Pornography” in the context of photographs and video is meant to convey safe, sane, and consensual sex between adults, produced for distribution to the public. The appropriate terms for criminal images of children are “Child Sexual Exploitation Material/Images” (CSEM) and “Child Sexual Abuse Material/Images” (CSAM). These terms are used exclusively when the material depicts a real-life human child who has been exploited or abused; they do not apply to images that are drawn (unless they are indistinguishable from photography and depict a real person) or to material that is text-based.

Why This Matters:

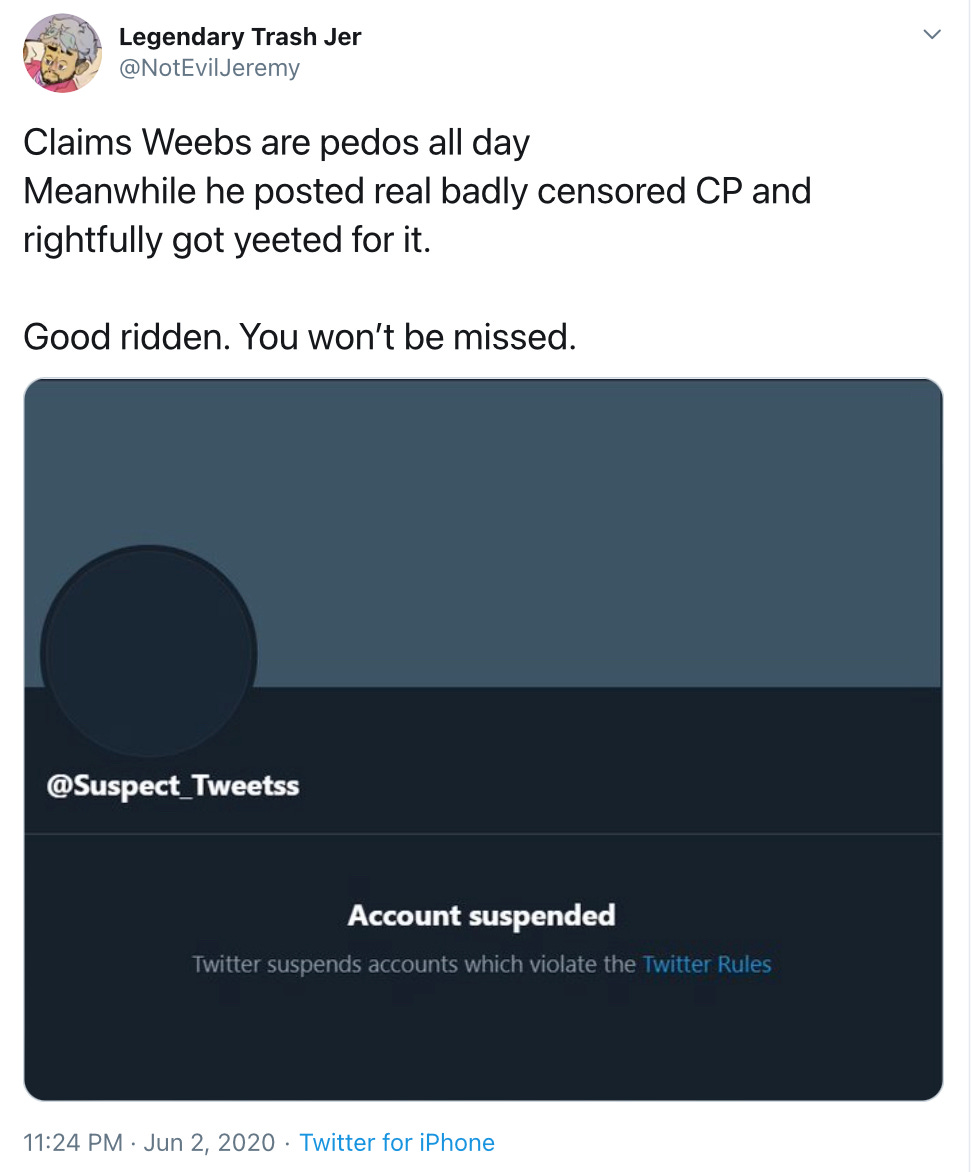

The internet has a long memory for some things and a short one for others. In June of 2020, something absolutely vile occurred among the many dozens of anti-shippers who had taken up the cause of fighting for the rights of fictional children during their downtime while COVID kept them home. It’s fairly well known that anti-shippers use the terms “child porn” and “CP” to refer to depictions of fictional characters in sexual scenarios if one of the characters is canonically underage (usually under 18) or if they simply choose to “read” the character as underage (for example, because the character looks young). On June 2nd, a popular account, @Suspect_Tweetss, was suspended for retweeting poorly censored photographs of the sexual abuse of real-life children (CSEM) in an attempted call-out. Given the nature of the account and its owner’s tendency to refer to drawn pornography as “CP,” many of their former followers then flocked to ask for evidence in the form of screencaps, some of them apparently unaware that in asking for screencaps, they were soliciting real criminal materials.

That this happened at all was a shock to many Twitter users, who were utterly flabbergasted that anyone would be so dense as to proliferate criminal images of children. The excuse was that the account was trying to “raise awareness,” but in reality it seemed as though the criminal materials were being used to feed the clout machine; that is, the account was attempting to capitalize on sexual images of children for rhetorical gain. @Suspect_Tweetss downloaded illegal images of children in the process of being sexually abused and poorly censored those images, only to re-upload them for likes, retweets, and follows on social media. Then, when the account was rightfully suspended, its former followers demanded to see the images, soliciting those who had taken screencaps of the material (now illegal images stored on their devices) to send them in direct messages. Many of these accounts were run by young teens or preteens who were entirely unaware that simply asking for these images is considered solicitation of CSEM, a crime under the laws of most countries. These users were treating CSEM as though it were low-stakes drawn pornography of anime characters when it was in fact criminal photographs of real, living, human children who suffered for the creation of those images.

Though this may sound like the work of a nightmarish psychological operation gone awry, unfortunately it’s no such thing. It’s the natural end result of conflating perfectly legal fictional material with material that is evidence of a real-life crime against an actual human being. The flippant manner in which this material was discussed and spread is exactly what professionals don’t want, as it gives the impression that it is socially acceptable to further exploit the suffering of a child. Make no mistake: rhetorical gain is still gain, and uploading CSEM to a Twitter account for views, likes, and engagement is exploitation of the children depicted in the images.

Fictional materials with fictional characters are not CSEM because no human being is exploited in the creation of that material and none ever will be: you cannot exploit a fictional child in any way that matters mentally or emotionally to that child, because that child does not exist. The truly important distinction is that the creation of CSEM causes direct harm to a person, while the arguments against drawn pornography (of child-like characters) cite alleged indirect harm and center the viewer rather than the person depicted in the image. When @Suspect_Tweetss downloaded real CSEM, they were centering themselves and their comfort over the harm being done to the subject(s) featured in the image. “I can handle this so I will raise awareness of it” purposefully ignores the direct harm of the image while citing the possible indirect harm allegedly suffered by the possible viewer(s).

It’s high time that anti-shippers struck “CP” and anything related to “child porn” from their lexicon. Using those terms is irresponsible, and given the internet’s relatively short memory for things like this, their callous disregard makes it possible that an incident like this will happen again. That anti-shippers do not actually know what to do when they come across actual Child Sexual Exploitation Material is an utter failure on the part of their natural social leaders, who should have been coaching their followers on what to do if they find true criminal materials, alongside their passionate moral speeches on the purity of fictional children.

What To Do If You Find Accounts Sharing CSEM Online

Report to the National Center for Missing & Exploited Children’s CyberTipline:

Remember that many accounts will not blatantly share this material. They use Twitter to advertise in coded language and often share through peer-to-peer networks, as it is safer for them than uploading the material to a website. These advertisements happen right out in the open, on fresh Twitter pages that sometimes last as little as a few hours, using particular hashtags that can hide among legitimate OnlyFans adverts, popping up here and there and noticed only by those who are looking.

The double-edged sword of “raising awareness” of criminal activity online is obvious: while one may be “raising awareness” among the general public that it is happening, one is also handing the means to future CSEM collectors and distributors who are looking for successful ways to expand their current inventory. Revealing particular hashtags and methods of distribution does little to aid the general public, as governments and law enforcement officials around the world are already entirely aware of them. The types of “attacks” anti-shippers stage against art accounts wouldn’t work against actual distributors of CSEM, because mass-reporting an account actually makes it more difficult for officials to track it and stage sting operations. Oblivious reporters might even be reporting a “plant” account meant to lure CSEM distributors.

Obfuscating what is or isn’t CSEM, clogging up tiplines with reports about fictional children, and using CSEM to feed the need for engagement on social media are ultimately the worst kind of “help” anyone has ever tried to give in the fight against the exploitation of children online. It’s time that anti-shippers took a long, hard look at what kind of “aid” they’re giving to the children and adults whose abuse has been and is currently being circulated among predators online, and altered their course accordingly. Instead of centering their own comfort, perhaps it is time they thought about someone else for once and actually learned how they can help rather than how loud they can whine.